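This script parses robots.txt, follows the sitemaps it lists, and crawls every business page they contain. Requesting each page once activates the site's Full Page Cache and warms up the whole site. At the end it reports the total and average time to first byte (TTFB).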

package main

import (

"flag"

"fmt"

"strings"

"net/http"

"net/http/httptrace"

"io/ioutil"

"github.com/beevik/etree"

"time"

"path"

)

var (

// URL 等必要参数定义

URL string = "https://www.baidu.com" // URL website

sleeptimes int64 = 100 // request sleep times

)

func init() {

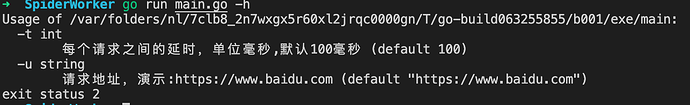

flag.StringVar(&URL,"u",URL,"主域名链接地址,参数演示:https://www.baidu.com")

flag.Int64Var(&sleeptimes,"t",sleeptimes,"每个请求之间的延时,单位毫秒,默认100毫秒")

flag.Parse()

}

// GetRobotsTxt .

func GetRobotsTxt(robotsURL string) (RobotsTxt string) {

resp,err := http.Get(robotsURL)

if err != nil{

panic(err)

}

if resp.StatusCode != 200 {

panic("不能访问robots.txt,请检查robots文件是否存在.")

}

defer resp.Body.Close()

body, err := ioutil.ReadAll(resp.Body)

if err != nil{

panic(err)

}

return string(body)

}

// GetURLsByURL .

func GetURLsByURL(robotsURL string) {

if strings.Contains(robotsURL, "//robots.txt") {

robotsURL = strings.Replace(robotsURL,"//robots.txt","/robots.txt",1)

}

URLsMap := make([]string, 0)

robotctx := GetRobotsTxt(robotsURL)

strarr := strings.Split(robotctx,"\n")

for _, str := range strarr{

if strings.Contains(str, "Sitemap") {

URL := strings.Split(str,": ")

if path.Ext(URL[1]) == ".xml"{

URLsMap = append(URLsMap, URL[1])

}else{

fmt.Println("获取到的SiteMap后缀为: ",path.Ext(URL[1]))

panic("与预期不符,请检查SiteMap文件格式")

}

}

}

if URLsMap == nil{

panic("未获取到Sitemap地址")

}

fmt.Println("找到Sitemap: ")

fmt.Println(URLsMap)

fmt.Println("开始读取XML: ")

for _, XMLURL := range URLsMap{

ReadXML(XMLURL)

}

}

// ReadXML to chan.

func ReadXML(XMLURL string) {

response, err := http.Get(XMLURL)

if err != nil {

fmt.Println(err)

}

defer response.Body.Close()

//Convert the body to array of uint8

responseData, err := ioutil.ReadAll(response.Body)

if err != nil {

fmt.Println(err)

}

// Convert the body to a string

responseString:= string(responseData)

responseString = strings.TrimSpace(responseString)

// Print the string

// fmt.Println("\nPrinting the xml response as a plain string: ")

// fmt.Println(responseString)

doc := etree.NewDocument()

if err := doc.ReadFromString(responseString);err != nil{

panic(err)

}

if SitemapIndex := doc.SelectElement("sitemapindex"); SitemapIndex != nil{

for _, URL := range SitemapIndex.SelectElements("sitemap"){

if loc := URL.SelectElement("loc"); loc != nil{

ReadXML(loc.Text())

fmt.Println("找到sitemap文件: ",loc.Text())

}

}

}else{

urlsets := doc.SelectElement("urlset")

for _, urls := range urlsets.SelectElements("url"){

if loc := urls.SelectElement("loc"); loc != nil{

urlsSetMap = append(urlsSetMap, loc.Text())

}

}

}

}

func visit(url string, sleeptimes int64) {

req, _ := http.NewRequest("GET", url, nil)

var start time.Time

trace := &httptrace.ClientTrace{

GotFirstResponseByte: func() {

//fmt.Printf("Time from start to first byte: %v\n", time.Since(start))

ttfbTime := time.Duration(time.Since(start))

ttfbs = append(ttfbs,ttfbTime)

//fmt.Println(ttfbTime)

//fmt.Printf("TTFB: %v\n", time.Since(start))

},

}

req = req.WithContext(httptrace.WithClientTrace(req.Context(), trace))

start = time.Now()

if _, err := http.DefaultTransport.RoundTrip(req); err != nil {

panic(err)

}

time.Sleep(time.Duration(sleeptimes) * time.Millisecond)

//fmt.Printf("TTFB: %v\n", time.Since(start))

}

func compute()(allTime,averageTime time.Duration) {

var all time.Duration

for _, ttfb := range ttfbs{

all += ttfb

}

allTime = time.Duration(all) / time.Second

alls := int(all)

averageTime = time.Duration(alls/len(urlsSetMap)) / time.Millisecond

return

}

var urlsSetMap []string

var ttfbs []time.Duration

func main() {

//get robots.txt

starttime := time.Now()

robotsURL := strings.Join([]string{URL,"/robots.txt"},"")

GetURLsByURL(robotsURL)

// urlsset:

fmt.Printf("一共找到了 %d 个页面, 正在请求,请稍等\n",len(urlsSetMap))

//printSlice(urlsSetMap)

if len(urlsSetMap) > 1 {

for _, url := range urlsSetMap{

visit(url,sleeptimes)

}

endtime := time.Now()

fmt.Println("程序总耗时: ",endtime.Sub(starttime))

allTime,averageTime := compute()

fmt.Printf("总TTFB约耗时: %d 秒,平均TTFB约时间: %d 毫秒.",allTime, averageTime)

}else{

fmt.Println("[ERROR]目标未使用标准的sitemap格式,请人工检查")

}

}

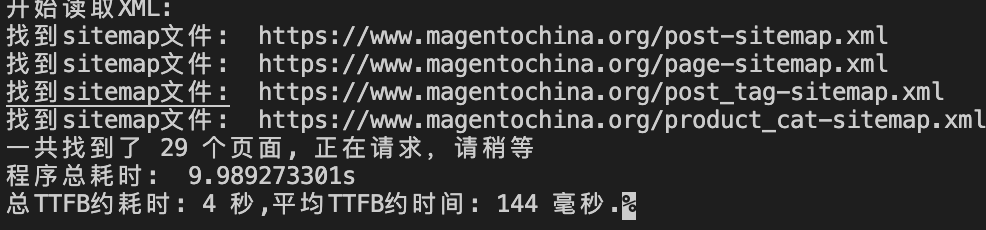

Result:

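This simple script does not account for restrictions imposed by intermediaries such as a WAF or CDN. If your site sits behind one, you can bypass it by editing the hosts file so that the domain resolves directly to the origin server.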
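A hosts-file entry is a line such as `203.0.113.10 www.example.com`. In Go the same effect can be had without touching the hosts file: give the client a transport whose dialer sends connections for the site's host to a fixed origin IP. This is a sketch that assumes you already know the origin address; `203.0.113.10` and `www.example.com` are placeholders, and `pinnedClient` and `pinAddr` are hypothetical helpers. Only the TCP destination changes, so the URL, the `Host` header and the TLS server name still carry the real domain, just as with a hosts-file entry.

```go
package main

import (
	"context"
	"fmt"
	"net"
	"net/http"
	"time"
)

// pinAddr rewrites a "host:port" dial address to "ip:port" when the host
// matches, mimicking a hosts-file entry; any other address is left unchanged.
func pinAddr(addr, host, ip string) string {
	h, port, err := net.SplitHostPort(addr)
	if err != nil || h != host {
		return addr
	}
	return net.JoinHostPort(ip, port)
}

// pinnedClient returns an HTTP client whose TCP connections to host go to ip.
// TLS is still negotiated by the transport using the hostname from the URL.
func pinnedClient(host, ip string) *http.Client {
	dialer := &net.Dialer{Timeout: 10 * time.Second}
	transport := http.DefaultTransport.(*http.Transport).Clone()
	transport.DialContext = func(ctx context.Context, network, addr string) (net.Conn, error) {
		return dialer.DialContext(ctx, network, pinAddr(addr, host, ip))
	}
	return &http.Client{Transport: transport}
}

func main() {
	// Placeholder host and origin IP: replace with your own values.
	client := pinnedClient("www.example.com", "203.0.113.10")
	resp, err := client.Get("https://www.example.com/robots.txt")
	if err != nil {
		fmt.Println("request failed:", err)
		return
	}
	defer resp.Body.Close()
	fmt.Println(resp.Status)
}
```

To use this in the script, the `http.Get` calls would go through such a client, and `visit` would call `client.Transport.RoundTrip(req)` instead of `http.DefaultTransport.RoundTrip(req)`. If an HTTP proxy is configured through the environment, the dial goes to the proxy and the pinning has no effect.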